Building a Portable Low Cost Tangible User Interface Based on a Tablet Computer

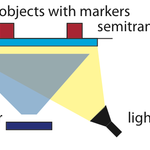

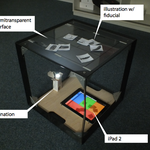

For our second “Independent Coursework”, I have written a paper about how to build a “Portable Low Cost Tangible User Interface Based on a Tablet Computer.” It evolved from the basic idea that recent tablet computers equipped with a camera, such as the iPad, can be used to create a table-top Tangible User Interface (TUI), a user interface in which the user interacts with physical objects. Such a device can be used for games, education, museums and so on. Since it runs on battery power, it does not depend on an external power source, and, in contrast to existing TUI devices, it is cheap, lightweight and therefore portable. To achieve this, the open-source computer vision framework reacTIVision was ported to the iOS platform so that it runs on Apple iPads and iPhones. This modified version is named iReacTIVision and is available on GitHub.

The abstract of the paper reads as follows:

This paper covers how to use a modern tablet computer and its integrated camera to implement a tangible user interface (TUI), which can be very handy in cases where battery power, low weight and portability are crucial. It is important to note that the tablet serves both as the application backend, which processes camera input and identifies and tracks tangible objects such as markers, touches and so on, and as the application frontend, which receives the processed information about these objects (such as the position and rotation of markers) and provides feedback to the user. For this work, the Apple iPad 2 was used to implement and test this approach, while other tablet devices, for example those running the Android operating system, might also be adapted to this field of application. This work is divided into three parts: The first gives an overview of the basic idea underlying this work as well as a short introduction to the fundamentals of tangible user interfaces. It covers basic image processing for fiducial recognition as well as the protocols used in such applications to deliver the extracted information to the application frontend. The second part briefly describes how the reacTIVision framework can be used on a tablet computer, and the third part describes the implementation of a prototype for the initially proposed idea. In the last section, conclusions are drawn and further development is discussed.
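The abstract splits the system into a backend that tracks markers and a frontend that receives their position and rotation. The desktop reacTIVision delivers this information through the TUIO protocol, as OSC messages such as `/tuio/2Dobj set` (one per marker) and `/tuio/2Dobj alive` (the session IDs still in view). The following minimal Python sketch shows how a frontend might turn such messages into marker state. It assumes the frontend receives already-decoded TUIO 1.1 argument lists, and the helper names (`Marker`, `parse_2dobj_set`, `to_screen`, `update_alive`) are hypothetical, not part of reacTIVision or iReacTIVision. OSC decoding and networking are omitted.

```python
import math
from dataclasses import dataclass


@dataclass
class Marker:
    session_id: int   # s: unique per tracked object instance
    fiducial_id: int  # i: class ID, i.e. which fiducial symbol was recognized
    x: float          # normalized horizontal position, 0..1
    y: float          # normalized vertical position, 0..1
    angle: float      # rotation in radians, 0..2*pi


def parse_2dobj_set(args):
    """Build a Marker from the arguments of a decoded '/tuio/2Dobj set' message.

    TUIO 1.1 argument order: "set", s, i, x, y, a, X, Y, A, m, r.
    The velocity and acceleration values (X, Y, A, m, r) are ignored here.
    """
    if len(args) != 11 or args[0] != "set":
        raise ValueError("not a TUIO 1.1 2Dobj set message")
    _, s, i, x, y, a = args[:6]
    return Marker(int(s), int(i), float(x), float(y), float(a))


def to_screen(marker, width, height):
    """Map normalized coordinates to pixels and the angle to degrees."""
    return (marker.x * width, marker.y * height, math.degrees(marker.angle))


def update_alive(markers, alive_ids):
    """Apply an 'alive' message: drop markers whose session IDs are not listed.

    Returns the set of removed session IDs so the frontend can react to them.
    """
    alive = set(alive_ids)
    removed = {sid for sid in markers if sid not in alive}
    for sid in removed:
        del markers[sid]
    return removed


if __name__ == "__main__":
    markers = {}
    m = parse_2dobj_set(["set", 7, 4, 0.5, 0.25, math.pi / 2,
                         0.0, 0.0, 0.0, 0.0, 0.0])
    markers[m.session_id] = m
    print(to_screen(m, 1024, 768))
    print(update_alive(markers, []))  # marker 7 has left the camera's view
```

Keying the state by session ID rather than fiducial ID lets the frontend tell apart two physical tokens that carry the same symbol, and the `alive` list is what signals that a marker has been removed from the table.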

You can download the paper here:

Source code for iReacTIVision is available on GitHub.
